Opera Adds Experimental Support for 150 Local LLMs

Posted on April 3, 2024

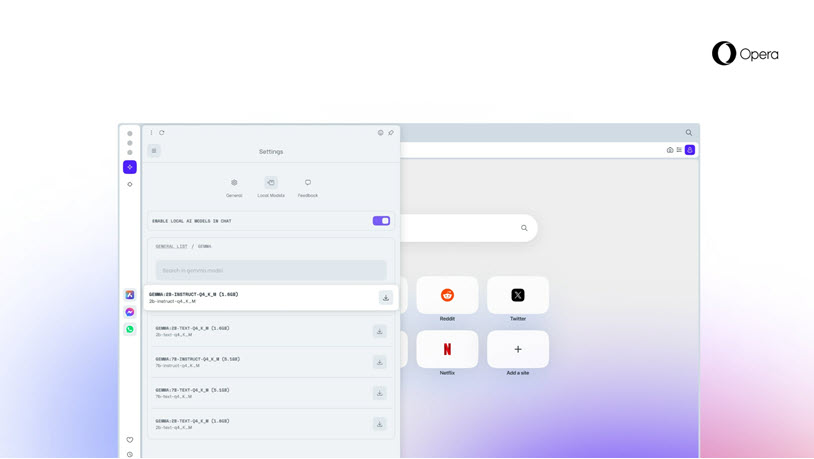

Opera announced today that it is adding local LLM support to its Opera One browser. Support will be available for 150 local LLM (Large Language Model) variants from approximately 50 families of models. Opera also offers an online AI service called Aria.

These are some of the local LLMs Opera One will support:

- Llama from Meta

- Vicuna

- Gemma from Google

- Mixtral from Mistral AI

Users will need to download the models to use them. The feature is part of the AI Feature Drops Program for Opera One. Here's an explanation of how it works from Opera:

> As of today, the Opera One Developer users are getting the opportunity to select the model they want to process their input with. To test the models, they have to upgrade to the newest version of Opera Developer and follow several steps to activate the new feature. Choosing a local LLM will then download it to their machine. The local LLM, which typically requires 2-10 GB of local storage space per variant, will then be used instead of Aria, Opera's native browser AI, until a user starts a new chat with the AI or switches Aria back on.

TechCrunch notes that you can also try online AI tools like Hugging Face. You can also now use ChatGPT without an account.

Image: Opera
